On Building A Library Part Two

John David Pressman

In 2014 I wrote a post about building a library from the pile of disorganized books at my high school. In it I went over how the books were indexed by setting up a ‘scanning’ station consisting of myself and a camera. I also discussed how the books were indexed by ISBN using a 3rd party service, and how to check the validity of ISBNs entered by hand or a bar code scanner. I included scripts to do these things, but left out the critical portion of what to do with the data once you have it. This is a follow-up post to correct this omission and finish what I started.

The library project is now largely finished and resides here. It took so long mostly because I had trouble deciding on the proper design for it. To understand why a proper design might be non-trivial, it helps to know how my school manages its computer network:

Each computer is reimaged frequently over the network, so that any 3rd party application you install is deleted unless it’s part of the network image. Moreover it would be very difficult to convince the network administrators to run a custom server solution for managing and updating the book records. After all we need to remember that our library software needs to handle:

- Addition and removal of book entries, or at least marking books as checked out or missing.

- Searching through the book database with enough flexibility that people can find books.

- Detailed book lookup so that people can be sure the item is the one they’re looking for and get other information about a book that they might not know about before finding it in the database.

Requirement number one was the hardest to satisfy. It wouldn’t be impossible to ship a flat application with a static library of books, but this would eventually become so out of sync with reality as to be useless. A book library index that lasts would need to be updatable. I considered several designs for this including:

- A peer to peer network application that would be installed on the network image and update itself over time by including options for the user to report missing books. The problems with this were the complexity of the design and the difficulty of managing who can and can’t add book entries and report a book as missing.

- A custom server solution where the library would be maintained by a central ‘librarian’ who would have access to this software hosted on the school’s internal network or an external server. The problem with this was that there seemed to be less interest than I’d want in maintaining this software, and the required skillset didn’t seem to be within reach of the faculty. Moreover, I doubted that the network admins would install a server if I wrote one.

- A centrally managed web application that I would write and host. The problem with this was that I would basically be taking on the responsibility of keeping this program running indefinitely and would become a central point of failure. If I ever decided I didn’t want to run things anymore that’d be it.

I ended up going with the third option. For one thing, the value of my library index is zero if it’s not available to anybody. A central point of failure is better than having never worked at all. I also realized that options three and two overlap. I’ve made my implementation open source, so hopefully I can eventually convince the school to either host my solution or fold it into a larger solution they end up implementing.

I’ll explain in the rest of this article how to build basic server software for the web. The basic overview is that my software is a set of CGI scripts run by apache2 and written in python. More complicated solutions such as wsgi exist but I avoided them because this is a small, relatively simple project.

The simplicity I was looking for by using CGI scripts with a stock webserver was in fact mostly there, with a few snags. CGI scripts let you make dynamic web content without having to fiddle with frameworks or other large infrastructure pieces. They’re not particularly hard to set up and I’ll go over the configuration in apache, the core details of what a cgi script does and how it works, how to test a cgi script, and a few minor things along the way that tripped me up.

Configuring Apache

I host jdpressman.com and this site on the same server using apache’s virtual host support. This means that I host multiple domains under the same IP address.

Apache has a page in their documentation on how to use a cgi script in apache. In short, you add a ScriptAlias directive to the host definition that points to a directory on the server designated for cgi scripts. Every item in that directory is assumed to be a cgi script and is executed when a user requests it.
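As a rough illustration, a virtual host definition with a script alias might look something like the fragment below. The domain name and both directory paths are made up for the example, not taken from my actual configuration. Depending on the rest of your setup you may also need to enable apache's CGI module and add a Directory block granting access to the script directory.

```apache
# Hypothetical virtual host; the server name and paths are placeholders.
<VirtualHost *:80>
    ServerName library.example.com
    DocumentRoot "/var/www/library/html"

    # Requests under /cgi-bin/ are mapped to this directory, and every
    # file in it is treated as a CGI script to execute.
    ScriptAlias "/cgi-bin/" "/var/www/library/cgi-bin/"
</VirtualHost>
```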

It’s important to be careful when setting the file permissions for your cgi script directory, because if you set it to the most permissive settings (read/write/execute for all users) then any vulnerability in your server becomes an easy way to inject arbitrary code into your application. Since ‘arbitrary code’ includes malware and your application is being served to dozens or even hundreds of users this is bad news.
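One way to sanity check this is a few lines of python that test whether a directory is writable by every user on the system. This is only a sketch: the function name is my own invention, and the temporary directory stands in for wherever your cgi scripts actually live.

```python
import os
import stat
import tempfile


def is_world_writable(path):
    """Return True if the 'other users' write bit is set on path."""
    mode = os.stat(path).st_mode
    return bool(mode & stat.S_IWOTH)


if __name__ == "__main__":
    # A temporary directory used as a stand-in for the real CGI directory.
    with tempfile.TemporaryDirectory() as cgi_dir:
        os.chmod(cgi_dir, 0o755)  # owner: rwx, group and others: r-x
        if is_world_writable(cgi_dir):
            print("warning: anyone on this machine can modify", cgi_dir)
        else:
            print(cgi_dir, "is not world-writable")
```

A mode like 755 on the directory (and on the scripts themselves) is usually enough for the web server to execute them without letting every account on the machine rewrite them.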

CGI Scripts

The basic setup for a CGI script is fairly simple. A CGI script takes input from a set of environment variables sent to it by the web server and uses these to spit out a fully formed HTTP response. That is, the HTTP headers and the actual page HTML are both sent by this script to standard output. The web server then takes this response and sends it to the user. CGI scripts are run as ordinary executables, which means that by adding a shebang line to the top of the script to tell the system what interpreter to use, they can be written in essentially any programming language.
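To make that concrete, here is about the smallest CGI script I can think of in python. It is a sketch rather than anything from the library project: the function name and the page contents are invented, and it assumes a Linux server where text written to standard output is passed through unchanged.

```python
#!/usr/bin/env python3
# The shebang line above tells the system which interpreter runs this file.
import os
import sys


def hello_page(environ):
    """Build a full CGI response (headers, blank line, HTML) as a string."""
    method = environ.get("REQUEST_METHOD", "GET")
    query = environ.get("QUERY_STRING", "")
    body = (
        "<!DOCTYPE html><html><body>"
        "<p>Method: {}, query string length: {}</p>"
        "</body></html>"
    ).format(method, len(query))
    # One header line, then an empty line, then the page itself.
    return "Content-Type: text/html; charset=utf-8\r\n\r\n" + body


if __name__ == "__main__":
    # The web server places the request details in environment variables.
    sys.stdout.write(hello_page(os.environ))
```

The script only reports the length of the query string instead of echoing it back, since pasting user input straight into a page is an easy way to create a cross-site scripting hole.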

The CGI scripts I used for my library project are written in python. I knew that I wanted my software to be able to search the database by different fields such as the author or the title. I also knew that I wanted users to be able to submit a search string and to specify whether they wanted results matching their string exactly or partially.
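A search like that could be sketched with sqlite roughly as follows. The table name, column names and function name here are assumptions made for the example and not necessarily what my scripts use.

```python
import sqlite3

# Hypothetical schema: a `books` table with `title` and `author` columns.
SEARCHABLE_COLUMNS = {"title", "author"}


def search_books(conn, searchby, search, searchtype):
    """Return (title, author) rows matching the search string."""
    if searchby not in SEARCHABLE_COLUMNS:
        raise ValueError("unsupported search field: " + searchby)
    if searchtype == "exact":
        operator, pattern = "=", search
    else:
        # Partial matching: the search text may appear anywhere in the field.
        operator, pattern = "LIKE", "%" + search + "%"
    # The column name comes from the whitelist above. The user's text is
    # passed as a bound parameter and never pasted into the SQL itself.
    sql = "SELECT title, author FROM books WHERE {} {} ? ORDER BY title".format(
        searchby, operator
    )
    return conn.execute(sql, (pattern,)).fetchall()
```

Note that sqlite's LIKE ignores case for ASCII letters by default while = does not, and that any % or _ typed by the user acts as a wildcard in the partial search.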

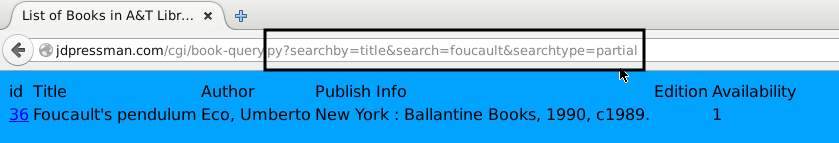

To accomplish these things, we use a web form. The web form sends the data it collects from the user to the CGI script in the form of a query string. You’ve probably seen a query string before without knowing what it was, so it’ll be easier to just show you one:

Everything past the question mark is the query string. If you type into a webform and submit it you’ll see that this query string is sent along with the url request. A CGI script uses this query string to determine how it should serve content to the user. For example in the query string pictured there:

searchby=title&search=foucault&searchtype=partial

The script is told to search the database by title for ‘foucault’ using partial matching. And as the screenshot shows it returns Foucault’s Pendulum as a result. In python the cgi module can be used to get the query string for a url request as part of a cgi script.
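The cgi module was removed from the standard library in Python 3.13, so the sketch below reads the QUERY_STRING environment variable directly and parses it with urllib.parse instead. That is a substitute for the approach described above, not what my scripts did at the time, and the function name and default values are invented for the example.

```python
import os
from urllib.parse import parse_qs


def read_search_params(environ=os.environ):
    """Pull the three search fields out of the CGI query string."""
    # parse_qs maps each field name to a list of values.
    query = parse_qs(environ.get("QUERY_STRING", ""))

    def first(name, default):
        return query.get(name, [default])[0]

    return {
        "searchby": first("searchby", "title"),
        "search": first("search", ""),
        "searchtype": first("searchtype", "partial"),
    }
```

Fields missing from the query string fall back to the defaults, so the script still has something sensible to work with when a user edits the url by hand.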

Once accessed, the query string can be processed and the response built as if you were writing a normal script that outputs some sort of data in HTML format; how things work internally after the initial input is basically entirely up to the programmer. The important part is that at the end you write three things to standard output:

- A set of valid HTTP headers.

- A blank line (a lone CRLF) separating the headers from the body.

- A complete HTML page.

Creating an HTML page from whatever data you have is an exercise left to the reader. Though if you’re unfamiliar with HTML as always I recommend Mozilla’s website. It’ll go over the basics of how to make an HTML page and how to use CSS to style it.
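That said, here is a small sketch of turning search results into a page, mostly to show one thing worth getting right: escaping. The function name and page layout are made up, and the rows are assumed to be (title, author) pairs.

```python
import html


def render_results(rows):
    """Turn (title, author) pairs into a small, complete HTML page."""
    # html.escape keeps a stray '<' or '&' in a book title from being
    # interpreted as markup by the browser.
    items = "".join(
        "<li>{} by {}</li>".format(html.escape(title), html.escape(author))
        for title, author in rows
    )
    return (
        "<!DOCTYPE html>\n"
        "<html>\n"
        '<head><meta charset="utf-8"><title>Search results</title></head>\n'
        "<body>\n<ul>{}</ul>\n</body>\n"
        "</html>\n"
    ).format(items)
```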

As for creating a valid set of HTTP headers, this one was tricky. Thankfully it’s not difficult to explain. Basically each line of an http header looks like this:

Content-Length: 1000

The tricky part is the line ending. HTTP headers don’t end with a bare newline; they end with a carriage return followed by a linefeed character. In python these can be written using the escape codes ‘\r’ and ‘\n’ respectively.

So what your string should look like in python is:

"Content-Length: 1000\r\n"

If we use python’s print() function then we’ll want to pass "\r\n" as the end parameter, replacing the default newline:

print("Content-Length: 1000", end="\r\n")

For more information on exactly what HTTP headers can be used in an HTTP response and more about HTTP in general, one can look at wikipedia’s articles on the subject. A minimal HTTP response can be made with just the Content-Length and Content-Type headers.
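Putting the pieces together, a minimal response with those two headers could be built like this. It is a sketch with an invented function name. It works in bytes because Content-Length counts bytes, not characters, which matters as soon as a page contains anything outside ASCII.

```python
import sys


def build_response(page, content_type="text/html; charset=utf-8"):
    """Return a complete CGI response as bytes."""
    body = page.encode("utf-8")
    headers = (
        "Content-Type: {}\r\n"
        "Content-Length: {}\r\n"
        "\r\n"  # the empty line that ends the header section
    ).format(content_type, len(body))
    return headers.encode("ascii") + body


if __name__ == "__main__":
    # Writing to sys.stdout.buffer sends the bytes exactly as built.
    sys.stdout.buffer.write(build_response("<p>caf\u00e9</p>"))
```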

The first line of an HTTP response is usually a status line like:

HTTP/1.1 200 OK

In apache, this line is sent for you and you will get an error if you include it.
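If you do need something other than 200 OK, the CGI specification (RFC 3875) provides a Status header that the server turns into the real status line. A sketch of a 'not found' response using it, with a made-up function name:

```python
import html


def not_found_response(message):
    """Build a CGI response that asks the server to send a 404."""
    body = "<p>{}</p>".format(html.escape(message))
    return (
        "Status: 404 Not Found\r\n"  # a CGI header, not an HTTP status line
        "Content-Type: text/html; charset=utf-8\r\n"
        "\r\n" + body
    )
```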

Debugging a CGI Script

Before deploying a CGI script to the server it should be tested on your development machine. How to do this is non-obvious because the web server passes several environment variables to the script and it can be hard to tell which are important. Thankfully this guide to debugging perl scripts on stack overflow comes to the rescue with the answer. The minimal environment variables you need to set are REQUEST_METHOD, SERVER_PORT, and for what we’re doing QUERY_STRING. For reference, when I was doing my testing the script seemed to work without including the question mark at the start of the QUERY_STRING variable.
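Rather than setting the variables by hand each time, the same thing can be done from a small python helper. This is a sketch: the function name and the default port value are my own choices, and the three variables are the ones listed above.

```python
import os
import subprocess
import sys


def run_cgi(script_path, query_string, method="GET", port="80"):
    """Run a CGI script roughly the way the web server would.

    Returns the script's raw standard output as bytes, so the CRLF
    line endings stay visible.
    """
    env = dict(os.environ)  # keep PATH and the rest of the environment
    env.update({
        "REQUEST_METHOD": method,
        "SERVER_PORT": port,
        "QUERY_STRING": query_string,  # no leading question mark
    })
    result = subprocess.run(
        [sys.executable, script_path],
        env=env,
        capture_output=True,
        check=True,  # raise if the script exits with an error
    )
    return result.stdout
```

For the search shown earlier, this would be called as run_cgi("book-query.py", "searchby=title&search=foucault&searchtype=partial"), and printing the result shows exactly what the web server would receive.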

Environment variables can be set in Linux with the ‘export’ command. (And confusingly enough, not the ‘env’ command, which prints the environment or runs a single command with modified variables.) How to set environment variables varies significantly between operating systems; you can look it up using a search engine or your operating system’s documentation.

As a note, the database backend I used for this project was sqlite, which I mentioned towards the end of my previous post. In order to use sqlite in a CGI script the user executing it (that is, the user your web server runs as when it fetches resources on your computer) must have read and write access on both the directory the sqlite file resides in and on the sqlite file itself. This is because the sqlite program wants to create a temp file in that directory and needs write access to do so.
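A quick way to check for this is a script like the sketch below. The function name is invented, and the check only means something if it is run as the same user the web server runs as.

```python
import os


def sqlite_access_problems(db_path):
    """List permission problems that would stop sqlite from writing."""
    problems = []
    directory = os.path.dirname(os.path.abspath(db_path))
    # The database file itself must be readable and writable.
    if not os.access(db_path, os.R_OK | os.W_OK):
        problems.append("cannot read and write " + db_path)
    # Creating the temp file needs write and search access on the directory.
    if not os.access(directory, os.R_OK | os.W_OK | os.X_OK):
        problems.append("cannot create files in " + directory)
    return problems
```

An empty list means neither problem was found. A missing database file shows up as the first problem.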

That bug stumped me for a good while.

An Even More Confounding Bug

There are some errors that are just frustrating when you figure out their cause. There’s a sense of injustice at having had one’s time wasted by something trivial or stupid. And then there are bugs that, while frustrating to deal with, turn out to be so convoluted and unexpected that you’re almost impressed with the ability of simple systems to fail in strange ways. This is one of those.

See, even after fixing the permissions problem described above for sqlite, I still couldn’t open the sqlite file. This single error bugged me for hours as I tried everything I could think of to fix it. Well, almost everything. Early on I suspected that the way I was building the filepath for accessing the database might be causing the problem, but I didn’t want to print the filepath to be sure because printing anything with HTTP headers was annoying. Besides, it already worked on my desktop, so the filepath handling must be good, right?

Well no.

As it turns out, my code generated the filepath to the database by splitting the filepath of the script being executed, which is determined internally by the path given to python3 on the command line. So when I run it locally like this:

python3 book-query.py

The filepath for the script is given as 'book-query.py' when what I had in mind was '/home/user/projects/library-client-web/cgi/book-query.py'. When you split 'book-query.py' you get the empty string '' and then when you append "books.sqlite" to the empty string you do in fact get the correct filepath. By contrast when you split the long path you get '/home/user/projects/library-client-web/cgi' and when you append 'books.sqlite' to it you get '/home/user/projects/library-client-web/cgibooks.sqlite'. So even though the script worked perfectly on my development machine it broke in production.
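The failure is easy to reproduce in a few lines. This is a reconstruction of the kind of code that causes it, with an invented function name, and not a copy of the original script.

```python
import os.path


def naive_db_path(script_path):
    """Reproduce the bug: glue the filename straight onto the directory."""
    directory = os.path.split(script_path)[0]  # drops the trailing slash
    return directory + "books.sqlite"


print(naive_db_path("book-query.py"))
# prints: books.sqlite
print(naive_db_path("/home/user/projects/library-client-web/cgi/book-query.py"))
# prints: /home/user/projects/library-client-web/cgibooks.sqlite
```

The relative path only works by accident: there is no directory part, so there is no missing slash to notice.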

My recommendation after that experience would be to write a simple function that prints a string with minimal HTTP headers for debugging purposes. Perhaps it takes a string as an argument, checks its length and then sends that with a Content-Type and Content-Length header. Also, if you are creating filepaths in python, tears can be avoided through the use of the os.path module’s split and join functions. These handle trailing slashes and the like for you. If you are splitting a filepath from __file__, use os.path.abspath() so that you’re guaranteed to get the full filepath no matter what is passed to the interpreter.
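Both recommendations fit in a short sketch. The function names and the default database filename are assumptions for the example. Inside a script, database_path would be called as database_path(__file__).

```python
import os.path


def database_path(script_file, db_name="books.sqlite"):
    """Locate the database next to the script, however it was invoked."""
    # abspath turns 'book-query.py' into a full path before splitting.
    directory = os.path.dirname(os.path.abspath(script_file))
    # join inserts the path separator that string concatenation forgot.
    return os.path.join(directory, db_name)


def debug_response(message):
    """Wrap a plain-text message in minimal headers for debugging."""
    body = message.encode("utf-8")
    headers = (
        "Content-Type: text/plain; charset=utf-8\r\n"
        "Content-Length: {}\r\n"
        "\r\n"
    ).format(len(body))
    return headers.encode("ascii") + body
```

Writing debug_response(database_path(__file__)) to sys.stdout.buffer from the deployed script would have shown me the bad path in the browser within minutes instead of hours.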